Apple’s Vision Pro ‘Spatial Computer’ and its companion operating system, visionOS, give us much to be hopeful about. So, based on this WWDC sneak peek, what does the Apple Vision Pro mean for healthcare?

Level Ex is the flagship developer of medical content that showcases what’s possible on the latest consumer devices. We’ve built the first clinician-focused medical content on Magic Leap 2, Oculus, and HTC Vive, as well as launch content for Apple’s and Google’s mobile AR technologies, ARKit and ARCore.

Here is our take:

The Good

Critical Mass is…Critical

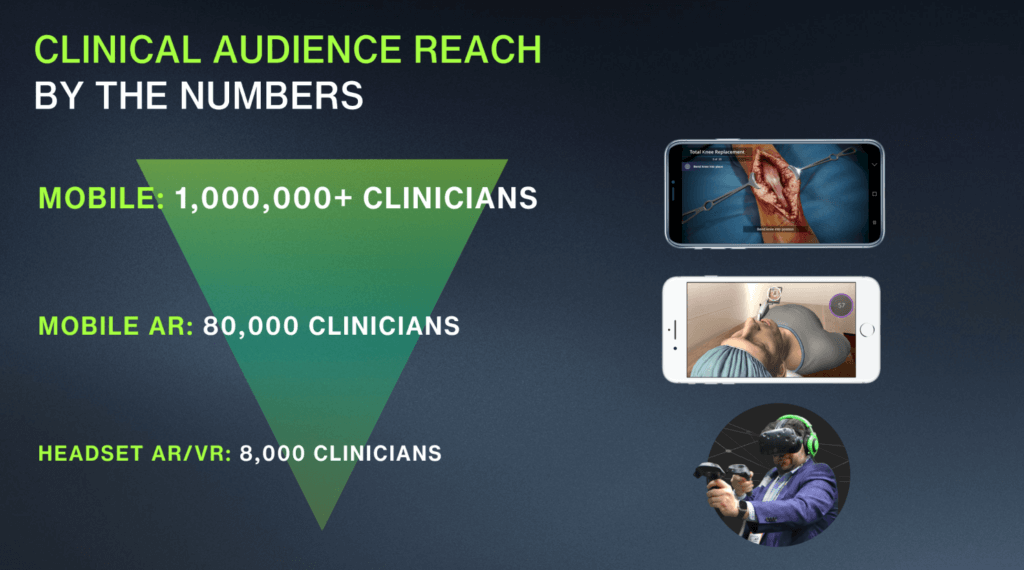

With over 3M downloads, Level Ex’s mobile games have been played by more than 1M medical professionals. Over 70,000 clinicians have played our mobile medical AR games, and roughly 7,000 have played our VR and AR headset experiences.

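What’s the lesson here? In 2023, embracing VR/AR headsets still means sacrificing reach by two orders of magnitude: more than 1M medical professionals on mobile versus roughly 7,000 on headsets is a gap of over 140x. Apple’s entry into the market could change that trajectory in 2024-2025.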

If anyone knows how to reinvigorate a consumer base and drive mainstream adoption of a plateauing modality, it’s Apple; just look at smartphones and tablets. If Vision Pro (and its follow-on products) make up a meaningful percentage of Apple’s next billion devices in market, headsets will finally become pervasive. Doctors are consumers too. Once the majority of medical professionals own personal headsets, earning CME (continuing medical education) credit while practicing surgical procedures or virtual patient interactions will finally become commonplace.

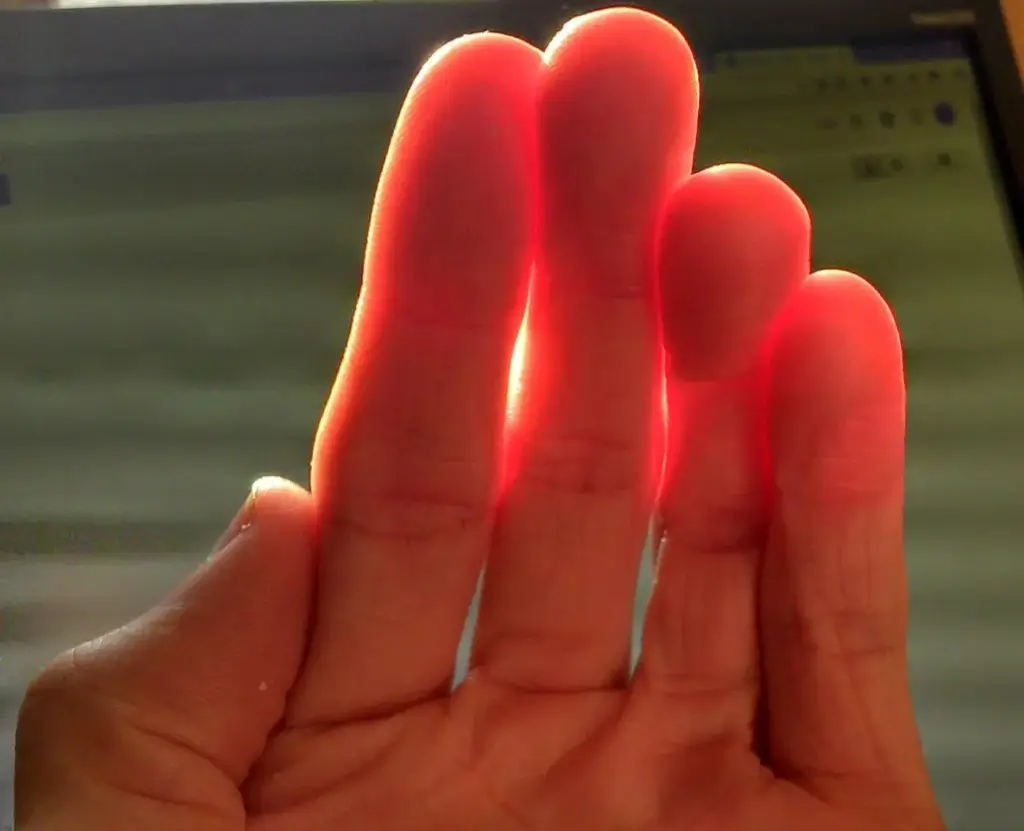

This’ll Fit Right In

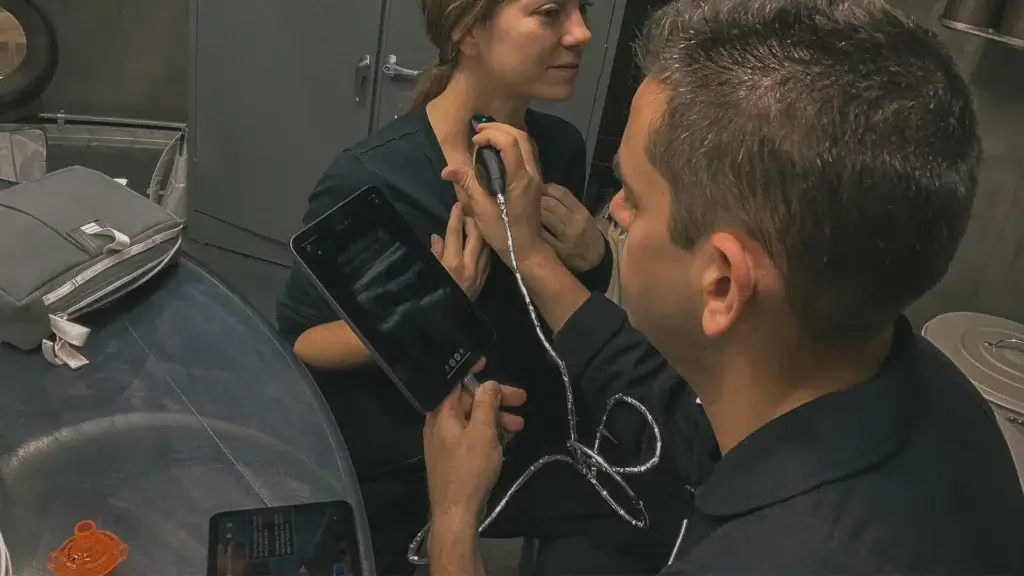

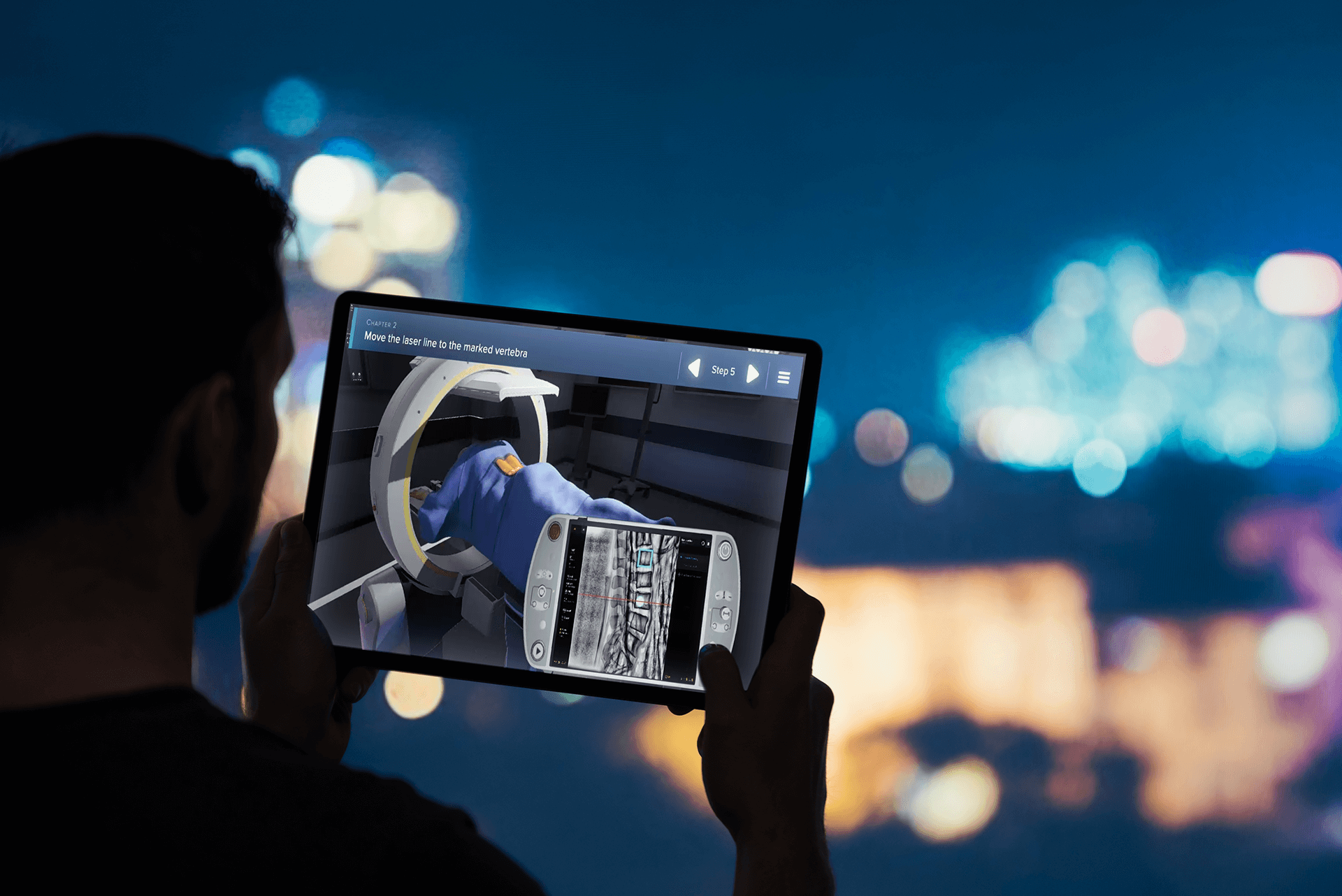

Vision Pro offers a number of capabilities that we know from experience on other devices benefit healthcare training applications. Vision Pro’s support for Apple’s existing ARKit API means cross-compatibility with iPhone for AR content, along with support for reliable, medical-grade augmented reality. ARKit was the first mainstream AR platform to capture the surrounding lighting environment and provide it as input to the application, which allows us to place virtual patients into the real world in ways that matter. What does it look like to intubate a patient on the floor of a dark room in an emergency? Turn the lights off and find out.

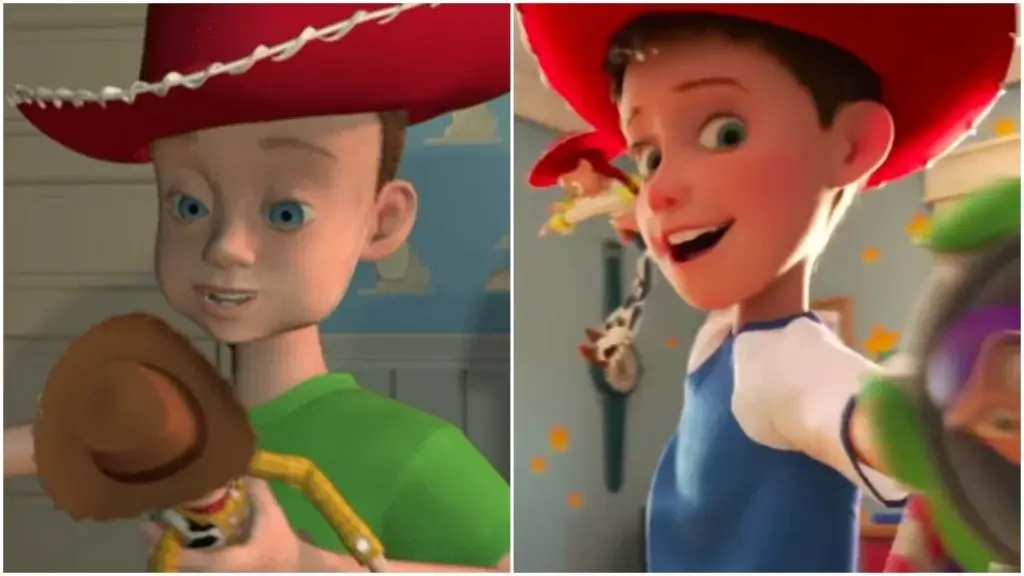

Intubating a virtual patient using ARKit in a Medtronic-sponsored minigame within Level Ex’s Airway Ex app. ARKit will be fully supported on Vision Pro.

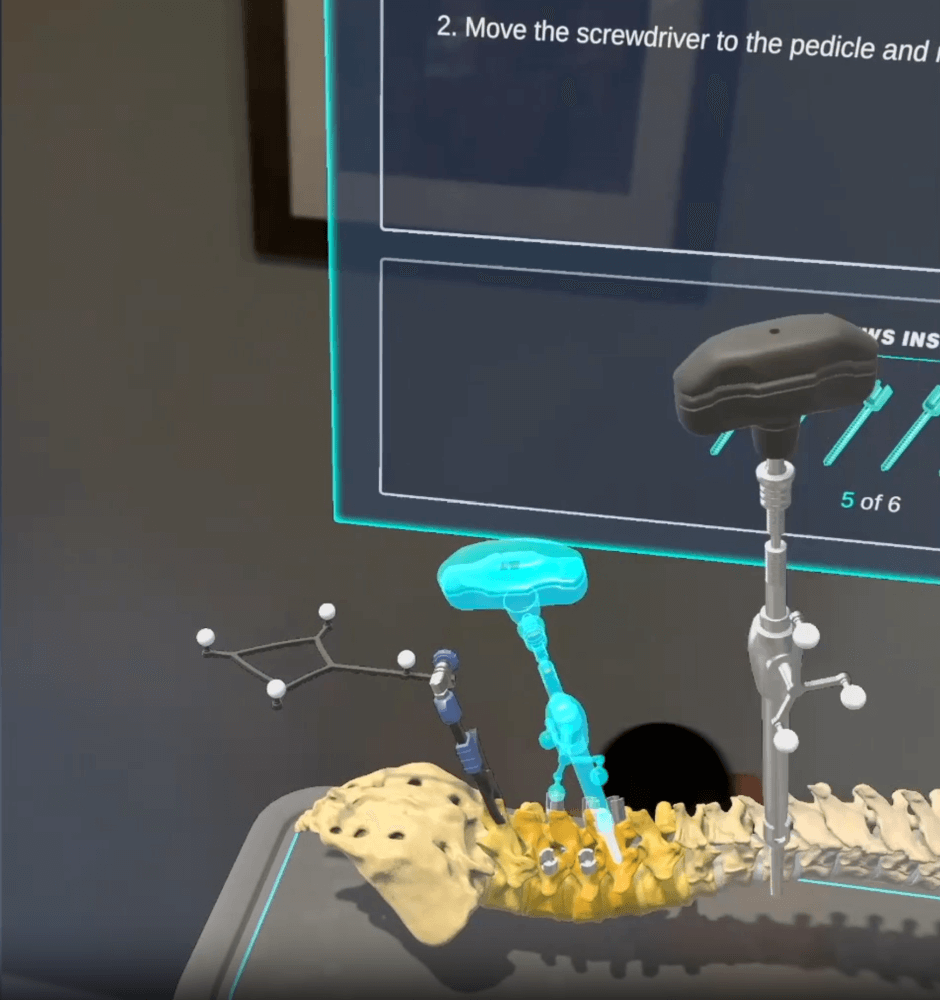

Our experience with Magic Leap 2 taught us the benefits of ‘local occlusion’ in augmented reality applications.

Local Occlusion on Magic Leap 2 – Also possible on Vision Pro

Local occlusion means that you can project a virtual object into the real world and have that object appear opaque. Vision Pro takes a different approach to achieving this effect than Magic Leap 2 (Vision Pro does it in software, since it shows you the real world through camera passthrough, while ML2 does it using optical semiconductor magic), but it’s the result that matters. Previous-generation AR was limited in that everything it projected into the real world was semi-transparent. In real medical scenarios, tools are occluded, and your hand may be occluded too. Recreating this occlusion is important for training scenarios, whether the trainee is a human or a machine: computer vision applications in the O.R. are often confounded by exactly this kind of occlusion.

Privacy is a Big Deal in Healthcare

Apple’s focus on privacy as a differentiator is a meaningful selling proposition in healthcare.

If you’re concerned that looking at someone’s eyes through this thing is weird, just remember that it’s less awkward than talking to your doctor while he spends your entire visit looking down at his computer screen, typing into the EHR.

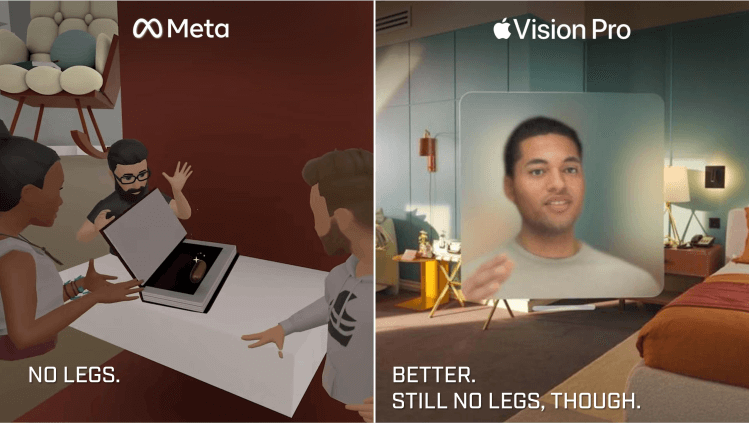

Apple’s App Universe is More Compelling Than Meta’s Lame Metaverse

I’ll take 100,000 iOS apps over metaverse hype any day.

I’ve commented many times on how Meta’s Metaverse vision (and implementation) lacks any real enduring value.

Who cares about buying pricey virtual Nike shoes and artificial real estate for your cheesy digital twin when there’s nothing compelling to do with it?

This is where Apple provides an especially stark contrast. Apple is bringing its existing ecosystem to the table, and the value is palpable: FaceTime. Apple Arcade. Media. Safari. All with meaningful advantages on the platform. Plus, developers get easy portability from other platforms.

Resolution is Key

Several of our VR and AR medical games feature virtual medical devices running their own software inside the game, and making that UI text readable (and usable) on a headset can be a real challenge. Apple seems to be thinking about this the right way: it is being very aggressive about pixel density (64x denser than iPhone) and offering features like foveated rendering.

Fingers crossed, however, because Apple has yet to reveal how the optics actually spread these pixels across your view. Hence…

The Disappointing

What Apple (Deliberately?) Withheld

Apple conspicuously left the two most important tech specs out of its reveal: what is the field of view, and how much does it weigh?

Mass and volume. The basics.

There are anecdotes of executives calling it a ‘limitless’ field of view, but that doesn’t…compute. My eyeballs don’t have a ‘limitless’ field of view inside my eyelids. This is a key stat that wasn’t shared, which triggers some skepticism. A narrow field of view limits usability in general. In healthcare, it limits the ability to recreate complex procedural scenarios where the challenge lies in operating in your three-dimensional environment: positioning yourself correctly relative to the patient and the surgical field, maintaining situational awareness across the various monitors, and coordinating with other people in the room.

As for the weight, they didn’t provide that either. WWDC developers could see the devices on a table but weren’t allowed to touch them… lest the force of lifting one reveal the device’s top-secret weight. If this thing is anything but featherweight, that’s a barrier to broad adoption. It could still be a boon for medical training, though, which often takes place in 10- to 60-minute sessions.

6/8 Update: Early-access journalists who tried the Vision Pro reported discomfort after use due to its weight.

In the history of Apple’s keynote reveals, I don’t think there’s ever been a device announcement that didn’t include how much the device weighs on Earth.

Some Disappointing “Firsts”

In addition to the “Mass Mystery,” the Vision Pro announcement marked a number of “firsts” for Apple keynotes, none of them good. Vision Pro is the first Apple product I can remember where:

- The presenter wasn’t wearing/holding/using the device. Seriously: not a single presenter was shown wearing it.

- The device had a two-hour battery life. You can’t even watch Avatar on the external battery.

- The product isn’t going to be available within 6 months. Typically, Apple’s June and September announcements are for products that will be available imminently, in time for back-to-school or the holidays. Not this time.

And that head strap, as comfortable as it looks, doesn’t appear especially sterilization-friendly, which makes us nervous about potential approval for use in medical and surgical practice.

Luckily, the strap isn’t much of an issue for medical training, which stands to benefit substantially from Apple’s remarkable entrance into the AR/VR market.

In Conclusion

As an Apple user and developer, I want a Vision Pro. I’ve been looking to buy a personal set of AR glasses to give myself a virtual widescreen monitor on airplanes (I spend a lot of time on airplanes), and this offers so much more.

As more is revealed about Vision Pro, let’s hope Apple will give medical AR/VR some solid legs to stand on in 2024.

This article was written by Level Ex CEO Sam Glassenberg and originally featured on LinkedIn


Follow Sam on LinkedIn to learn more about advancing medicine through videogame technology and design